Reading and Writing Files Using Pandas

The basic Python provides good set of classes and functions for reading and writing to the file system. However a Data Analyst will rarely get to use those functions, especially if you are mostly dealing with table data as Pandas directly hands you a DataFrame from a file which is in table format.

In this lesson you will see some examples. Before we dive into reading files, let us understand the most common file formats:

- csv - comma separated values

- xml - eXtensive Markup Language

- json - JavaScript Object Notation

Construct a DataFrame using the contents of the file

For all tabular data where a DataFrame data structure is more convenient to manipulate, you can use any of the below methods based on the file format.

Reading a CSV file

The most common file format is a csv; which stands for comma separated values. In this file all the column values are separated by a comma and an optional header is present in some files in which case the column labels are separated by comma. Download the Titanic dataset from here: https://storage.googleapis.com/mbcc/titanic.csv

Open the downloaded file using some kind of text editor. You will see all the columns are separated by a comma. A row ends in a new line character. Hence every row has its own line.

The above dataset which you have been working with is part of Seaborn so you did not have to download to your machine. If you want to work with this downloaded file you would invoke the below command:

import pandas as pd

titanic_df = pd.read_csv('datasets/titanic.csv')

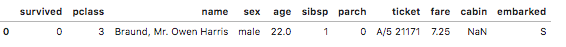

titanic_df.head(1)

Output:

The head(1) method used on the DataFrame object titanicdf, returns the first row of the dataframe. You will learn more on _head in the next lesson.

Ensure that the downloaded file is moved to 'datasets' folder. If you do not have one, then create a 'datasets' folder as a child of the folder in which your .ipynb file is present.

You can also create the dataframe by directly invoking the csv file from its source. Here is the code to do just that:

titanic_df = pd.read_csv('https://storage.googleapis.com/mbcc/titanic.csv')

Titanic Column Information

If you are curious about what the column labels and the values mean in this dataset, here is the rundown on that:

- survival - Survival (0 = No; 1 = Yes)

- class - Passenger Class (1 = 1st; 2 = 2nd; 3 = 3rd)

- name - Passenger Name

- sex - Passenger Gender

- age - Age of the Passenger

- sibsp - Number of Siblings/Spouses Aboard with the Passenger

- parch - Number of Parents/Children Aboard with the Passenger

- ticket - Ticket Number

- fare - Passenger Fare

- cabin - Cabin

- embarked - Port of Embarkation (C = Cherbourg; Q = Queenstown; S = Southampton)

Dump the contents of a DataFrame to a file

You can conveniently save the contents of the DataFrame into a file on the filesystem using one of the below methods depending upon the type of file format to use.

Writing to a CSV file

To write a DataFrame to a CSV file, all you have to do is invoke to_csv function on the dataset by giving the file path and voila! a new file is created from the contents of a DataFrame Here is an example of getting all the records of travellers who are above 50 years to a separate file:

above50 = titanic_df[titanic_df.age > 50]

above50.to_csv("datasets/above50.csv", index=False)

Output: There is output on the notebook but a file by name above50.csv would be created in the 'datasets' folder without the index column.

Reading a JSON file

To read a JSON file format, all you have to do is use 'read_json' instead of 'read_csv'. Here is an example:

df = pd.read_json("https://storage.googleapis.com/mbcc/datasets/cdc_smoking.json")

print(df.shape)

Output:

(55, 9)

From the shape output we can see that this dataset has 55 records and 9 columns.

Other file format

While CSV and JSON are the most commonly used file formats, Pandas provides functions for other file formats too; HTML, XML, Relational databases etc.. Refer the official documentation for details: https://pandas.pydata.org/pandas-docs/stable/io.html

Construct a Dictionary object using the contents of a file in JSON format

You can convert a JSON file content into a dictionary object using the json module. Here is an example

from urllib.request import urlopen

import json

my_dictionary = json.load(urlopen('https://raw.githubusercontent.com/plotly/datasets/master/geojson-counties-fips.json'))

type(my_dictionary)

You will see that my_dictionary is a dictionary object.

urllib library provides the urlopen function to read a file, given the URL of the file. This returns the http.client.HTTPResponse stream which is in turn fed to json_load function, that converts the JSON read from the response object into a Python dictionary object.

In case of exceptional conditions, you need to close the opened resources like the HttpResponse using a try-catch block. Python also provides a convenient with statement which handles the opened resources automatically. Here is a better implementation of the above function

with urlopen('https://raw.githubusercontent.com/plotly/datasets/master/geojson-counties-fips.json') as response:

print(type(response))

my_dictionary = json.load(response)

Construct a Dictionary object using string, byte or bytearray that represents a JSON

There is another convenience method loads, that can convert a String, bytes object or bytearray array into a dictionary. Here is an example

learning_center = '{ "school":"mbcc", "country":"USA", "city":"Troy"}'

my_school = json.loads(learning_center)

type(my_school)

In this example, learningcenter is a JSON string that is converted to a dictionary using the _loads function.

The complementary function to convert a dictionary to a JSON string is dumps and here is an example

my_dictionary = {"school":"mbcc", "country":"USA", "city":"Troy"}

my_school = json.dumps(my_dictionary)

type(my_school)

You will see that my_school is a JSON string.